XR

Research

ILM StageCraft

Made by Industrial Light and Magic

When filming season 1 of The Mandalorian, the crew used large LED walls to display 3D environments made in Unreal Engine. The environments were tracked to the movement of the camera, which meant that the background shifted in sync with the camera to create the effect of being on a real set. This mixed reality approach had a few benefits compared to a green screen set:

- The actors were able to immerse themselves much more easily.

- Reflective materials (like the Mandalorian’s helmet) reflected the actual environment being used, which saved a ton of time in post-production.

- The crew could adjust the environments on the fly during filming, with changes ranging from the lighting to weather conditions and virtual props.
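
The camera tracking described above comes down to a bit of geometry. Here's a minimal sketch of the idea, not ILM's actual pipeline. It assumes the LED wall is a flat plane at z = 0, the camera is in front of it (z > 0) and the virtual object sits on or behind it (z ≤ 0). The function name `wall_position` and the coordinate setup are my own assumptions for illustration.

```python
def wall_position(camera, point):
    """Return the (x, y) spot on the wall plane z = 0 where a virtual
    point has to be drawn so that it lines up correctly from the camera.

    camera: (x, y, z) of the tracked camera, with z > 0 (in front of the wall)
    point:  (x, y, z) of the virtual object, with z <= 0 (on or behind the wall)
    """
    cx, cy, cz = camera
    px, py, pz = point
    if cz <= 0 or pz > 0:
        raise ValueError("camera must be in front of the wall, point on or behind it")
    # Fraction of the way from the camera to the point at which the
    # line between them crosses the wall plane.
    t = cz / (cz - pz)
    return (cx + t * (px - cx), cy + t * (py - cy))


# A virtual rock 4 m behind the wall and a mountain 12 m behind it.
rock = (0.0, 0.0, -4.0)
mountain = (0.0, 0.0, -12.0)

# Camera moves 2 m to the right while staying 4 m from the wall.
print(wall_position((2.0, 0.0, 4.0), rock))      # (1.0, 0.0)
print(wall_position((2.0, 0.0, 4.0), mountain))  # (1.5, 0.0)
```

When the camera moves 2 m sideways, the closer object shifts 1 m across the wall and the farther one 1.5 m. That difference is the parallax that makes the wall feel like a real location instead of a flat backdrop.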

Google Lens

Made by Google https://lens.google

Here's an example of an AR app I personally use all the time. Google Lens is very similar to regular Google, but instead of typing a search you take a picture with the app. Lens then uses its machine learning capabilities to compare your image to other images on the internet in order to determine what the picture shows. It can be used to identify objects like plants, barcodes and buildings, translate text in real time, solve math problems and more.

Recently, I used Google Lens to identify a weird plant I found in my back garden in order to check if it was toxic, since we have cats in the house that might want to eat it. I took a picture of the plant with Lens and found out that it was called Potentilla indica, more commonly known as a mock strawberry because of how much it looks like a real strawberry plant. The plant is apparently not dangerous at all, but I wouldn't have known that if it weren't for Google Lens.
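
I don't know how Lens works internally, but the general idea of comparing one image to a set of known images can be sketched like this. The sketch assumes each image has already been turned into a list of numbers (a feature vector) by some model. The vectors, the labels and the function names below are all made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Similarity between two feature vectors: close to 1.0 means they
    point in the same direction, close to 0.0 means they are unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def best_match(query, references):
    """Return the label of the reference vector most similar to the query."""
    return max(references, key=lambda label: cosine_similarity(query, references[label]))


# Hypothetical feature vectors for two known plants.
references = {
    "mock strawberry": [0.9, 0.1, 0.3],
    "wild strawberry": [0.2, 0.8, 0.4],
}

# Hypothetical feature vector for the photo I took.
photo = [0.8, 0.2, 0.3]
print(best_match(photo, references))  # mock strawberry
```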

Snapchat Lenses

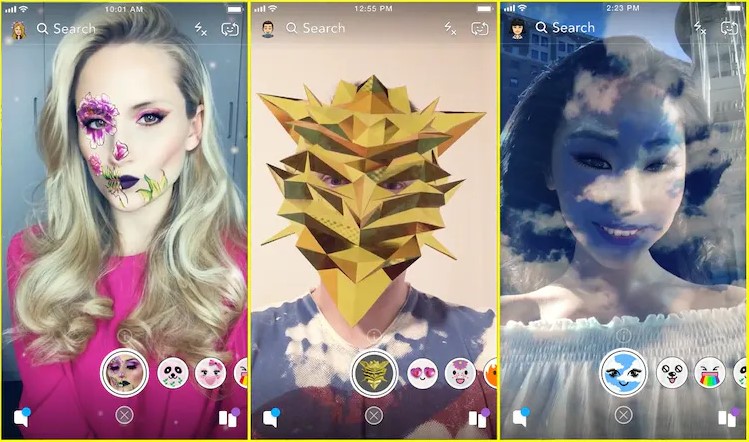

Snapchat's face filters stand out to me as one of the biggest, if not the biggest, commercial successes in the world of XR technology in terms of global impact. They introduced augmented reality to a whole demographic of people that might not have ever been interested in it otherwise, and Snapchat was one of the first companies to work with AR in this way. The filters, or Lenses as Snapchat calls them, use pixel data from your camera to detect and locate facial features and align them with a virtual model of a face. This model can then be used to do just about anything, like deforming the mask to change your face shape or eye color, adding features like the now infamous dog nose and ears, or setting animations to trigger when you open your mouth or raise your eyebrows.
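
As a rough sketch of how an "open your mouth" trigger could work once the facial features have been located (this is not Snapchat's actual code), you can compare the gap between the lips to the width of the mouth. The landmark names, the pixel coordinates and the 0.35 threshold are assumptions I picked for the example.

```python
import math


def mouth_open_ratio(landmarks):
    """Lip gap divided by mouth width. Using a ratio keeps the result
    roughly the same whether the face is near or far from the camera."""
    gap = math.dist(landmarks["upper_lip"], landmarks["lower_lip"])
    width = math.dist(landmarks["mouth_left"], landmarks["mouth_right"])
    return gap / width if width else 0.0


def should_trigger(landmarks, threshold=0.35):
    """True when the mouth is open wide enough to start the animation."""
    return mouth_open_ratio(landmarks) > threshold


# Hypothetical landmark positions in pixels, as (x, y).
closed = {
    "upper_lip": (50, 60), "lower_lip": (50, 64),
    "mouth_left": (30, 62), "mouth_right": (70, 62),
}
opened = dict(closed, lower_lip=(50, 80))

print(should_trigger(closed))  # False
print(should_trigger(opened))  # True
```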

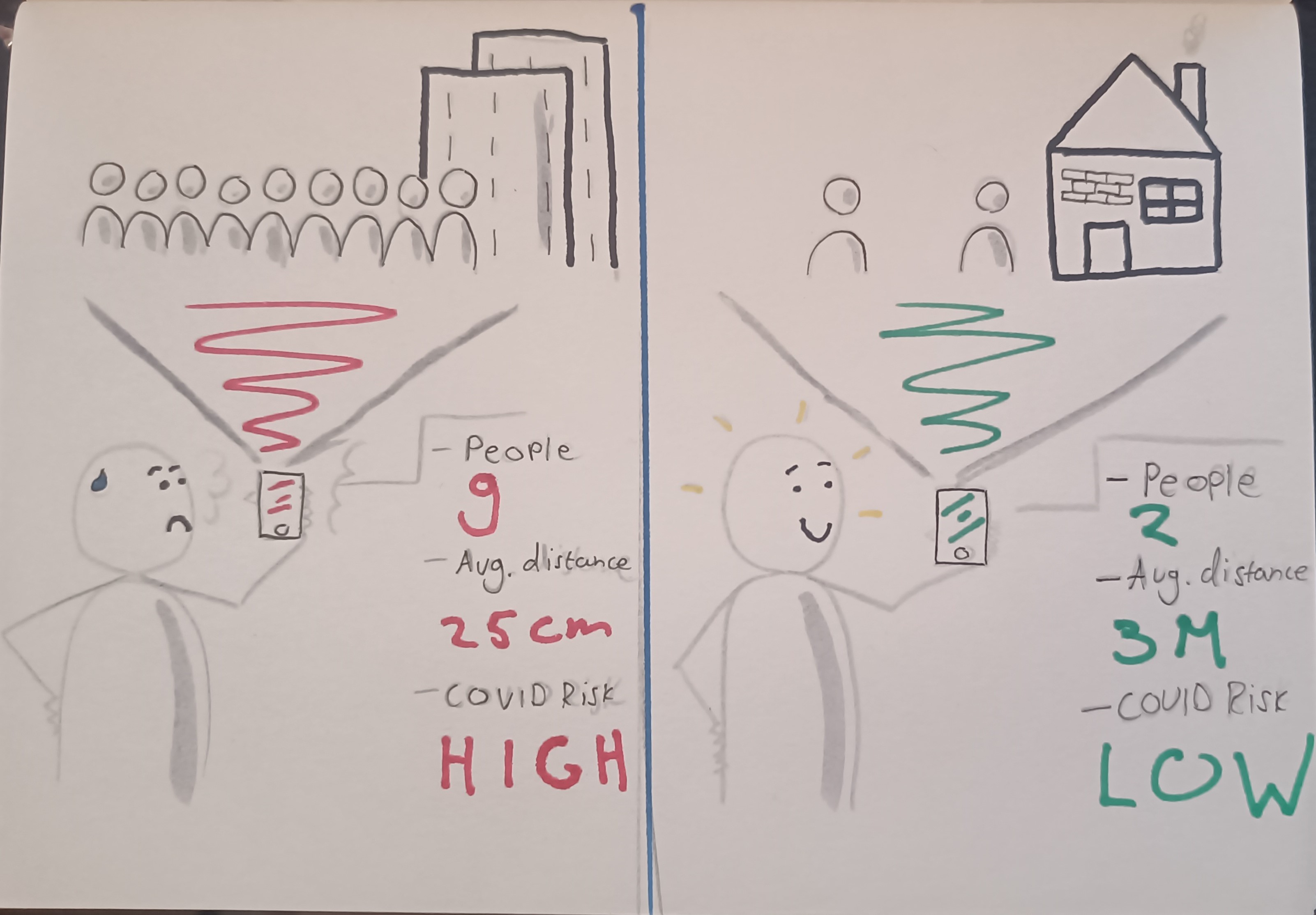

Concept SafeZone

SafeZone is an AR app that helps keep its users safe from COVID in crowded areas. The app allows its users to calculate and visualize the COVID risk of any given area in real time. SafeZone scans an area using the camera to record the number of people and the average distance between them. This, combined with local medical data, is then used to determine whether the area the user is looking at is safe to be in. The app overlays the result on the phone's camera view to visualize the user's risk of contracting COVID in the scanned area.
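
To make the concept a bit more concrete, here's a minimal sketch of the calculation. It assumes the camera scan has already produced a ground position in meters for every person it detected, and that the local medical data comes down to one number: the estimated share of people in the area who are currently infectious. The scoring formula, the 1.5 m safe distance and the thresholds are placeholders I made up, not real medical guidance.

```python
import math
from itertools import combinations


def crowd_stats(positions):
    """Return (number of people, average distance between every pair).
    The average is None when fewer than two people were detected."""
    pairs = list(combinations(positions, 2))
    if not pairs:
        return len(positions), None
    average = sum(math.dist(a, b) for a, b in pairs) / len(pairs)
    return len(positions), average


def risk_level(positions, local_incidence, safe_distance=1.5):
    """Combine crowd size, spacing and local incidence into a rough label."""
    count, average = crowd_stats(positions)
    if average is None:
        return "low"
    # 1.0 when people stand closer than the safe distance, lower when spread out.
    closeness = 1.0 if average == 0 else min(1.0, safe_distance / average)
    score = local_incidence * count * closeness
    if score < 0.05:
        return "low"
    if score < 0.2:
        return "medium"
    return "high"


# Four people standing on the corners of a 1 m square.
scan = [(0, 0), (1, 0), (0, 1), (1, 1)]
print(risk_level(scan, local_incidence=0.02))   # medium
print(risk_level(scan, local_incidence=0.001))  # low
```

The AR part of the app would then color or label the camera view based on the returned level.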

Reflection

I think XR is a very intriguing topic with a lot of potential, but I also think the people who talk about its countless practical applications and powerful capabilities to immerse are kind of glossing over the fact that the technology isn't that far along yet. The tech we have right now is expensive and kind of clunky, and at the moment I think that for most consumers and companies it's just too much of an inconvenience to justify bringing it into their daily lives. Despite all of this, I think that XR has a very bright future, as long as there are enthusiasts out there thinking of new and creative ways to use the technology.