Lab weeks

Process

Week 1 - Day 1 - Introductions

It was the first morning, and we started off with a team-building exercise: we had to get to know each other, figure out why we had been put in the same group, and make a physical logo for the group. We couldn't find many commonalities besides the fact that we all spoke Dutch and some of us had pets. We decided that our logo would be a mix of those pets, a cat and a chicken, which led straight into our idea for the artificial creature we were going to make.

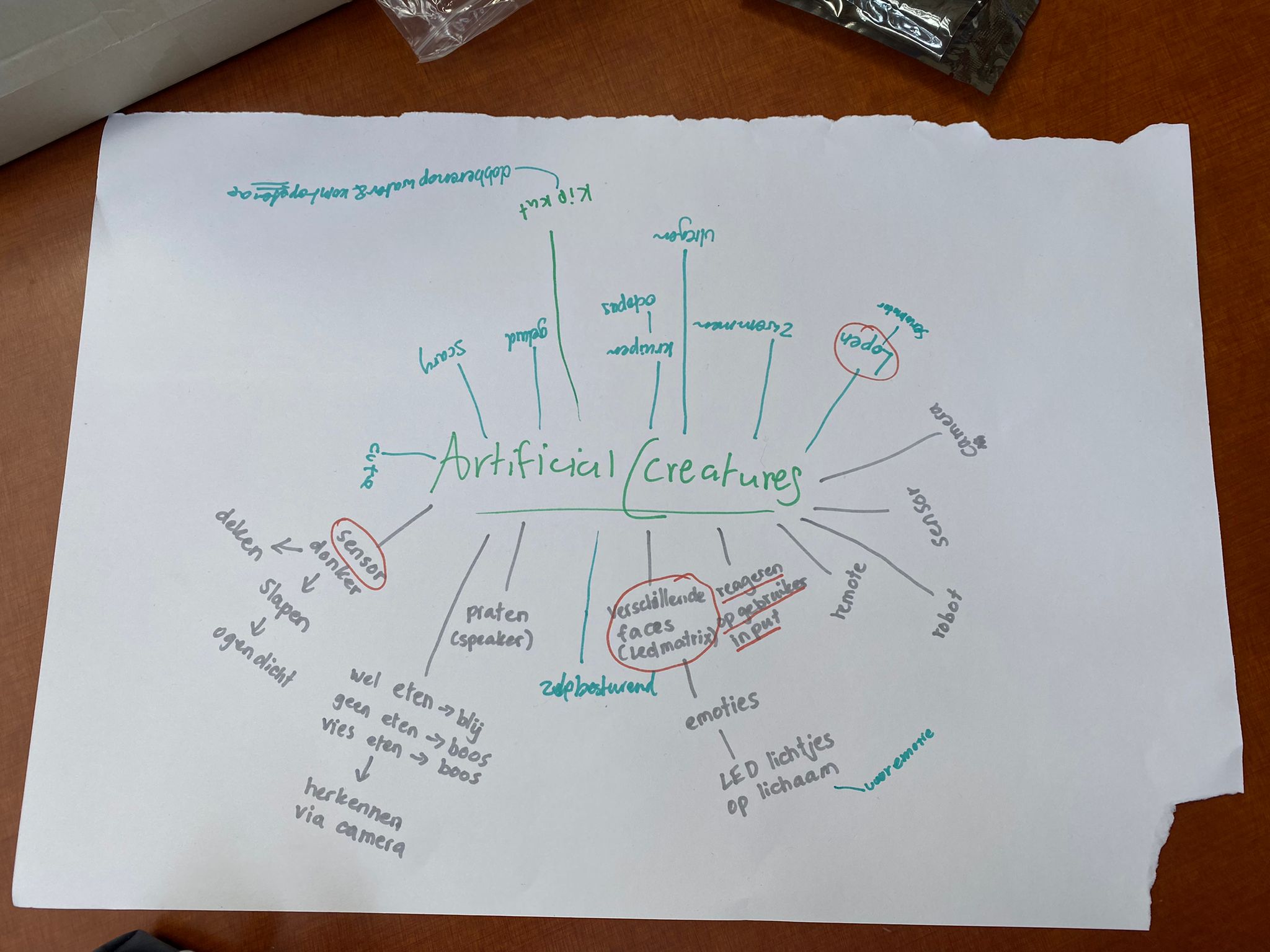

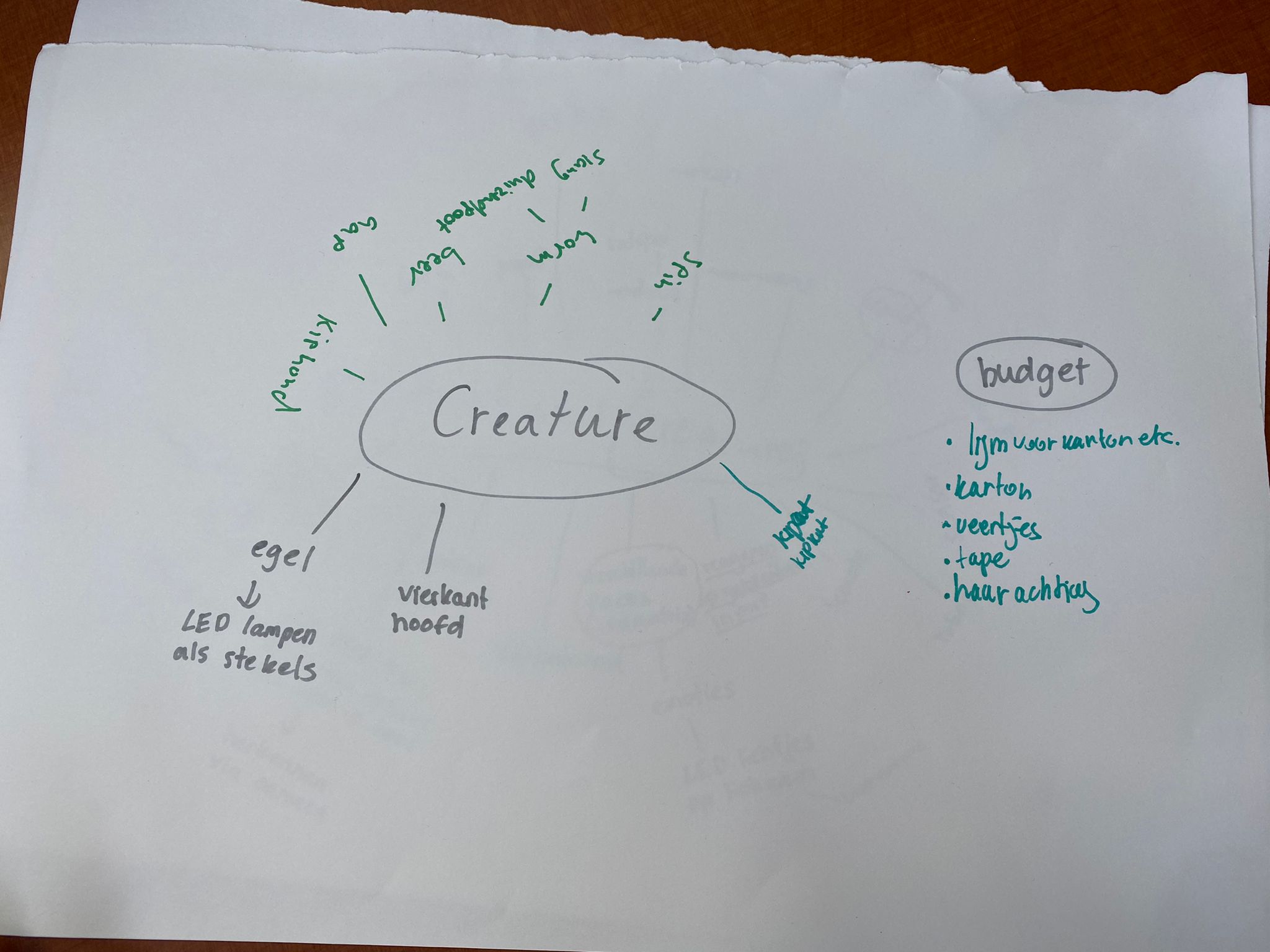

We started off our project by brainstorming some possible creatures and functions.

Once we knew which functions we definitely wanted, we split into two teams and started working on walking and eye movement. Yustin and I worked on getting something to walk using four micro servo motors, while May, Tessa and Allyshia started on the LED matrix and programming the eye movement. I started by looking at other projects online that used servos, and we adapted some code we found. Once we had wired up the servos, we taped them to a phone case, and we had our first walking prototype.

One problem with this prototype was that only three of the four servos would move at the same time. Initially we thought one of the servos was broken, but after some testing that turned out not to be the case. We tried different code configurations and could control each servo fine individually, just not all four at the same time.

(video)

We then suspected it had to do with how much power the Arduino could supply to each servo, so we split the load in two by connecting a second Arduino to the circuit. This worked, but only as a temporary fix.

Eventually we simply increased the delay between steps so the Arduino had more time to process the inputs, and that worked. It was an adequate solution.

(video)

It couldn't walk very far, because the phone case was too small to hold any of the other electronics (Arduino, breadboard, power bank) and the servos weren't strong enough to support their weight.

After getting the motion down with the phone case, we built a simple cardboard box that could hold the servos and the other electronics.

We worked on these problems over the course of the day and made considerable progress with our first prototype. We ended the day with a simple cardboard body with stubby servo legs, working code for leg movement, and LED matrix animations for blinking, looking left and looking right. At the end of the day I also presented our cardboard servo box to the rest of the class.

(end of day 1 video)

Week 1 - Day 2 - Crawl before you can walk

Today we worked further on the locomotion and interactivity of the prototype (the LED matrix, and LEDs with a light sensor).

I mostly worked with Yustin on locomotion: making legs out of cardboard and attaching them to the servos on the body. We also tried adding knees, but that proved to be 1) too power-hungry for the Arduino and 2) a lot of work.

(video)

We had a lot of trouble with power today. We concluded that we need capacitors and a motor shield to power multiple servos at once, as well as bigger servos that can support more weight. Being able to carry more weight means we'll be able to fit the electronics into the body and move to a sturdier prototype made of wood.

Week 1 - Day 3 - Feeling bad, start on sound

I wasn't feeling well today and didn't get a lot of work done. We did get the materials to start working on the wooden body.

I also experimented with the piezo speaker to give our creature some life. I'm planning to use capacitive touch sensors to trigger the sounds, alongside the occasional random noise.

I also helped out a bit with the head of our cardboard prototype, fixing a problem where the eyes would stay closed for as long as the tail was moving.

Week 1 - Day 4 - Everyone is sick :(

Today most of our group was tired and under the weather, so we cut the day short. We did still do some work on our wooden prototype, and I started sketching designs for the creature's beak. At one point we decided to switch from the light sensors to ultrasonic distance sensors, which would be easier to trigger reliably. We had been running into the problem that the light level naturally changes throughout the day, so the threshold for triggering the eye movement would have had to change throughout the day as well.

Week 2 - Day 1 - Working from home

Over the weekend I found out that I have COVID, which explains my symptoms from day 2. Luckily no one else on the team caught it, which I'm very thankful for. This means I'll be working from home this week, which is going to be a whole different kind of challenge.

Since I can't work on the physical prototype from home, I talked with the team over WhatsApp and we decided that I should write our concept statement. I wrote it in Dutch; translated, it reads:

"The Kipkat is a hybrid, Frankenstein-like creature that feels lonely, alone and left out. He doesn't know anyone else who is like him, and he is always looking for love, friends and attention from others. Many of his friends ride around on wheels (the other artificial creature groups), and they always laugh at him for the weird way he walks."

After this, I started working on the beak, which meant working with the piezo speaker and the capacitive touch sensor. I looked at a lot of code, trying to figure out how to play melodies without using delay(). This matters once multiple actuators share the same Arduino: delay() blocks the whole sketch while it waits, so if the melodies were played with delay(), nothing else could happen while a note was playing, and triggering multiple actuators at the same time wouldn't be possible.

To get around this, I used some code I found online that relies on millis() instead (the Arduino example sketch BlinkWithoutDelay.ino uses the same approach) and tried to figure out exactly how it worked. I then tried to retrofit this millis()-based structure onto my earlier code for the beak.
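The millis() approach can be sketched as a small state machine. This is a minimal illustration in plain C++ so it runs off the board, not the actual beak code: instead of calling the real millis(), the current time is passed in as a parameter, and the melody, note lengths and the names MelodyPlayer, update() and simulate() are all hypothetical. Like BlinkWithoutDelay.ino, update() never waits; it only checks whether enough time has passed and returns immediately.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// One note: frequency in Hz (0 means a rest) and duration in milliseconds.
struct Note {
  unsigned int frequency;
  unsigned long duration;
};

// Non-blocking melody player in the style of BlinkWithoutDelay.ino.
// Instead of calling delay() for each note, update() is called on every pass
// of the main loop with the current time and only moves on to the next note
// once the current one has lasted long enough.
class MelodyPlayer {
 public:
  explicit MelodyPlayer(std::vector<Note> notes) : notes_(std::move(notes)) {}

  // Starts (or restarts) the melody at time `now` (milliseconds).
  void start(unsigned long now) {
    index_ = 0;
    noteStart_ = now;
    playing_ = !notes_.empty();
  }

  // Returns the frequency that should be sounding at time `now`, or 0 for
  // silence. It never waits, so the caller's loop keeps running.
  unsigned int update(unsigned long now) {
    if (!playing_) return 0;
    // While playing, index_ always points at a valid note.
    assert(index_ < notes_.size());
    // Unsigned subtraction keeps working when a millis()-style counter wraps.
    // The while loop catches up if update() was called late and notes passed.
    while (now - noteStart_ >= notes_[index_].duration) {
      noteStart_ += notes_[index_].duration;
      ++index_;
      if (index_ >= notes_.size()) {
        playing_ = false;
        return 0;
      }
    }
    return notes_[index_].frequency;
  }

  bool playing() const { return playing_; }

 private:
  std::vector<Note> notes_;
  std::size_t index_ = 0;
  unsigned long noteStart_ = 0;
  bool playing_ = false;
};

// Drives a player with a simulated clock (standing in for millis()) from 0 to
// endMs in steps of stepMs, recording every change of output frequency as a
// (time, frequency) pair.
std::vector<std::pair<unsigned long, unsigned int>> simulate(
    MelodyPlayer& player, unsigned long endMs, unsigned long stepMs) {
  std::vector<std::pair<unsigned long, unsigned int>> changes;
  player.start(0);
  for (unsigned long now = 0; now <= endMs; now += stepMs) {
    unsigned int freq = player.update(now);
    if (changes.empty() || changes.back().second != freq) {
      changes.emplace_back(now, freq);
    }
  }
  return changes;
}
```

On the Arduino itself, a sketch built this way would call update(millis()) on every pass of loop() and call tone(pin, frequency) or noTone(pin) only when the returned frequency changes, where pin is whichever pin the piezo is wired to. Because update() returns right away, the rest of loop(), such as reading the touch sensor, keeps running between notes.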

Week 2 - Day 2 - Touch sensors

Today I started working on the capacitive touch sensors, which turned out to be very hard (especially using only the materials I had lying around in my room).

Week 2 - Day 3 - More experimenting with sound

I experimented more with different types of sounds, chirps and songs for the Kipkat. However, it's getting kind of hard to stay productive in quarantine without the team.

Week 2 - Day 4 - Making the beak

Today I made the beak.

I also worked on integrating my beak code into the code running the eyes and ears, but later found out this wasn't necessary, since the beak's code would run on a separate Arduino.

Week 2 - Day 5 - Expo

Today was the last day, and my first day back at Maakhaven since last week. I got up early to finish the code for the beak. When I got there, we started integrating what I had done at home with what the team had built at Maakhaven, and we did some final testing; everything was going well. After lunch we got back to Maakhaven just as the expo was about to begin. Exactly as Chris had prophesied, Murphy's Law kicked in and things slowly started breaking. First the servo legs started freaking out and breaking down, which was a real bummer. A few hours into the expo, the touch sensor on the beak also stopped working. People still seemed interested in the Kipkat, I suppose because it looks kind of unique and weird. I had a lot of fun at the expo talking to people about our concept, and they seemed genuinely interested in our broken, lonely little creature.

Product - Kipkat

The Kipkat is a lonely, attention-seeking creature. It has a few sensors and actuators that give it a distinct personality.

- Servo motors allow the Kipkat to walk.

- Ultrasonic distance sensors, when triggered, activate the LED matrices and servos on the Kipkat's head.

- A piezo speaker in the beak makes noises every so often to attract people's attention. The top of the beak is also equipped with a capacitive touch sensor, which allows the Kipkat to be petted; petting it triggers a sound on the piezo speaker.

Users can interact with the Kipkat by petting it on the sides of its head, which makes the eyes and ears point towards their hand. Users can also pet the Kipkat on its nose (the top of the beak), which makes the beak cry out happily that someone has touched it.
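The ultrasonic trigger behind the head interaction can be sketched in a few lines. This is a minimal, hypothetical version in plain C++ rather than the Kipkat's actual code: it assumes one HC-SR04-style sensor on each side of the head, echo times measured in microseconds with Arduino's pulseIn(), and a made-up distance threshold; echoToCm(), Look and lookToward() are illustrative names. The echo covers the distance to the hand and back, so the conversion multiplies by the speed of sound (about 0.0343 cm per microsecond at room temperature) and halves the result.

```cpp
#include <cassert>

// Speed of sound at about 20 degrees C: roughly 343 m/s, or 0.0343 cm per
// microsecond.
constexpr float kSoundCmPerUs = 0.0343f;

// Converts an echo pulse width in microseconds (what pulseIn() returns for the
// sensor's echo pin) into a distance in centimetres. The sound travels to the
// hand and back, hence the division by two. pulseIn() returns 0 when it times
// out, which is reported here as -1 ("no reading").
float echoToCm(unsigned long echoUs) {
  if (echoUs == 0) return -1.0f;
  return static_cast<float>(echoUs) * kSoundCmPerUs / 2.0f;
}

enum class Look { Ahead, Left, Right };

// Hypothetical rule: look toward a side whose reading is closer than the
// threshold; if both sides qualify, look toward the closer one.
Look lookToward(float leftCm, float rightCm, float thresholdCm) {
  assert(thresholdCm > 0.0f);
  const bool left = leftCm >= 0.0f && leftCm < thresholdCm;
  const bool right = rightCm >= 0.0f && rightCm < thresholdCm;
  if (left && right) return leftCm <= rightCm ? Look::Left : Look::Right;
  if (left) return Look::Left;
  if (right) return Look::Right;
  return Look::Ahead;
}
```

For example, with a threshold of 10 cm, a hand about 5 cm from the left sensor and nothing near the right one gives Look::Left, which head code could map to the "looking left" LED animation and ear servo position. Unlike the light sensor readings, this distance does not drift with the daylight, so a fixed threshold can stay the same all day.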

Reflection

Now that HCI is over, I'm pretty pleased with how it all went, though there's definitely room for improvement in our creature.

I think I did quite a lot of work during the lab weeks, both at Maakhaven and at home.

Looking back on it, I had a good time and I learned a lot too. My team was very friendly and cooperative.
